The future of branch banking is unclear. Some are calling for its death. Others are saying branches are still critical to a bank’s success. One thing is clear—things are changing fast.

Many banks are well on their way to implementing their branch of the future, while others are still trying to figure out what makes sense for them. Waiting to see what works before diving in could be a dangerous path. Customer loyalty is dropping, big banks are trying new ways to engage your customers, and FinTech companies are developing simple solutions to customers’ problems. Can you afford to take a wait-and-see approach and hope to win on products and services?

But it is hard to know which approaches will work. How can you effectively test out how customers will respond to different approaches while managing costs? One approach many companies take is running experiments. Let’s dive in and see the impact of experiments and how to run them effectively.

Innovation through experiments is happening today

Banks of all sizes are running experiments all the time to better understand how to provide great products, service and engagement at branches.

Let’s take a look at a few examples.

Bank of America

It is well known that Bank of America started using experiments in its retail branches in the early and mid-2000s. (Here is a great HBR article covering details of what they did.) The bank created an Innovation and Development (I&D) team that developed a process for executing experiments in a live retail setting. The team has executed numerous experiments that have led not only to improved products and services, but also to a much deeper understanding of the services the bank provides and their impact on customer behavior. Today the I&D model is common in many large and mid-size banks.

PNC Bank

More recently (since 2013), PNC Bank has been experimenting with pop-up branches. The pop-up branch allows PNC to take a high-tech branch to places where people already congregate, instead of waiting for customers and prospects to happen by their branch. They don’t have to take the time to build a full branch, but can test to see if the location warrants one. The “branch” is tiny (160 square feet) and can fit on a sidewalk or in a shopping mall open space. It offers many of the traditional services of a branch, but also acts as a place to showcase PNC's latest retail technology. While there aren’t a lot of details on the experiment, it seems to be having some success, since PNC has rolled out pop-up branches in Pittsburgh, Chicago, Atlanta and most recently, at West Virginia University in Morgantown, WV.

America First Credit Union

But it isn’t only the big guys who are experimenting. I met some folks at America First Credit Union who told me about their innovation center. It is set up in a mall in downtown Salt Lake City, UT, so the visitors are diverse. America First customers can use it like a traditional branch, but they are using some of the latest tech that banking has to offer. So the center acts as both a branch and a lab. America First employees observe customers and prospects using the new technology to see what is working. They also get instant, direct feedback on each piece of tech in the center—whether it is effective, who it is effective for, where to place it in a branch and more. This data is used to help them understand new technology adoption and feeds into America First’s retail strategy.

These are just a few examples of banks experimenting with new tech, products and services to find which have the impact they need to make their branches successful. But how can they be sure these experiments will pay off?

How to execute experiments effectively

Executing effective experiments is not easy to do. Many people look to R&D labs to understand how to do this, which makes sense given they take a scientific method approach to learning. However, this is done in labs—a controlled environment where it is much easier to isolate the impact of different variables in an experiment. But this controlled environment lacks the dynamics, the variety of customer types, volume of traffic and the reality of a real branch.

I think there is a better learning platform that has been developed over the last several years—Lean Startup. It combines ideas from customer centricity, product management and lean manufacturing to optimize the learning cycle. And it is designed to help make business decisions in the real world, not in a lab.

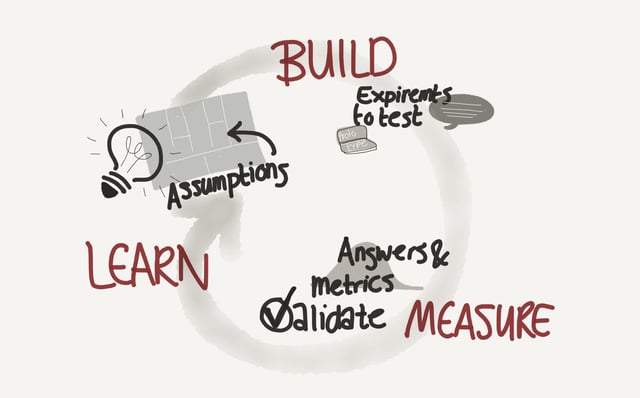

Lean Startup is all about learning fast using a model called Build - Measure - Learn.

From: NEXT Amsterdam

This model assumes you won’t run a single experiment and learn everything you need to know, but that you will need multiple experiments and will iterate to success. It rewards companies that optimize for learning when the best path forward is unclear. The faster you can execute effective experiments, the faster you can learn what works and what doesn’t.

Okay, so how do you execute an experiment? The following section provides a template.

Develop a Hypothesis

Hypotheses begin as guesses. A hypothesis is usually based on someone’s observation of the current state of some process, product feature, message, etc. That person believes that, given the current state, changing some variable will produce a different outcome. In other words, they are making a prediction.

Predictions are often stated in the form of...

“If [specific action] then [expected measurable outcome]”

In other words, if variable X is changed, then outcome Y is expected.

For this approach to be effective, you really need to know what the current state looks like. What are the metrics that tell you what the current state is? Can you isolate the variable you want to change to validate the outcome? This gets messy outside the lab, and real-world experiments aren’t as rigorous as lab experiments, but you should strive to make them as effective as possible.

Also, the hypothesis must be falsifiable. You must be able to show that your experiment succeeded or failed. Reducing ambiguity is critical. To help with this, you can define not only what success looks like, but also what constitutes failure. Clear definitions can be useful in situations where it is hard to isolate the variable in your hypothesis.
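To make this concrete, here is a minimal sketch of a hypothesis written down with explicit success and failure conditions. The class name, the field names and the threshold values are all hypothetical and only illustrate the idea of defining both outcomes up front.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """A prediction of the form 'If [action] then [expected outcome]'."""
    action: str                 # the variable being changed
    expected_outcome: str       # the measurable outcome we predict
    success_at_or_above: float  # metric value that counts as success
    failure_at_or_below: float  # metric value that counts as failure

    def __post_init__(self) -> None:
        # The failure threshold must sit below the success threshold,
        # otherwise a single result could be both a success and a failure.
        if self.failure_at_or_below >= self.success_at_or_above:
            raise ValueError("failure threshold must be below success threshold")

    def statement(self) -> str:
        return f"If {self.action} then {self.expected_outcome}"

    def evaluate(self, observed: float) -> str:
        """Classify an observed metric value against the agreed thresholds."""
        if observed >= self.success_at_or_above:
            return "success"
        if observed <= self.failure_at_or_below:
            return "failure"
        return "inconclusive"


# Hypothetical example: the metric is new accounts as a share of baseline.
h = Hypothesis(
    action="we change variable X",
    expected_outcome="new accounts stay at 95% or more of baseline",
    success_at_or_above=0.95,
    failure_at_or_below=0.80,
)
print(h.statement())
print(h.evaluate(0.97))  # success
print(h.evaluate(0.85))  # inconclusive
```

Anything that lands between the two thresholds is reported as inconclusive, which is a signal to run a follow-up experiment rather than to declare victory.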

Define Your Metrics

To validate, or invalidate, your hypothesis you must define the metrics you are going to measure. The success / failure condition of your hypothesis must be based on these metrics. Ideally these will be quantitative metrics because qualitative metrics are harder to gather and analyze. That said, using affinity grouping and other techniques, qualitative data can be turned into quantitative data. Also, qualitative measures can be useful for getting a feel for whether you are on the right track. For instance, you might question 10 customers to see if you can validate your hypothesis before executing a full-scale experiment.

Avoid vanity metrics! These are metrics that appear to show improvement and make you feel good, but don’t create the business results you are seeking. A simple example is a subscription-based website improving its SEO and getting more visitors. If those visitors aren’t paying for subscriptions, does it matter that there are more visitors? A better metric would be new subscriptions. Or if the goal is improving the quality of the visitor, then measuring the ratio of subscriptions to visitors would be a helpful metric.
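The subscription example can be sketched in a few lines. The function name and all of the numbers below are made up for illustration; the point is only that visitor counts can rise while the metric that matters falls.

```python
def conversion_rate(new_subscriptions: int, visitors: int) -> float:
    """Ratio of new subscriptions to visitors over the same period."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return new_subscriptions / visitors


# Hypothetical figures before and after an SEO push.
before = conversion_rate(new_subscriptions=200, visitors=10_000)
after = conversion_rate(new_subscriptions=210, visitors=20_000)

print(f"before: {before:.2%}")  # before: 2.00%
print(f"after:  {after:.2%}")   # after:  1.05%
```

In this made-up case visitors doubled, new subscriptions barely moved and the quality of the average visitor dropped, which the visitor count alone would have hidden.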

Plan Your Experiment

To execute your experiment effectively, you need to plan it, just like you would a new project. An experiment may take a couple of people one week to execute, or it may take a large team and many months. You must ensure the correct resources are made available and have time to focus on the experiment. You must estimate the investment being made and have a sense of the potential business impact to make sure the experiment is worth executing. And you must design the experiment to ensure it isn’t stacked for success—it needs to be a real-world test.

This planning can often lead to analysis paralysis. To avoid this, time-box your planning. Give yourself enough time to do a good job, but not enough time to make things perfect. Remember what Voltaire (and my boss) said: “The perfect is the enemy of the good.”

An Example Experiment

I know, enough with the abstract stuff, let’s have an example. This is a simple example to reinforce the experiment template. Much more detail than this would be needed to execute this experiment effectively.

The Scenario

We’ve noticed at our branches that it requires 1 full-time banker at each branch to handle new account openings. We think we can install kiosks and account origination software in our branches and remove the need for that full-time employee to do account opening.

Hypothesis

If we install self-service account origination kiosks in our branch, then we can maintain the rate of quality new account creation while requiring less than 25% of a full-time banker's support.

Metric

We could simply use the number of new accounts. However, this won’t give us a sense of the quality of the accounts opened. For instance, I could offer an iPad with every new account opening, but I may end up with much lower quality accounts due to the incentive. Instead, we need to look at not only the number of new accounts, but also the average account balance for those accounts and ensure it stays steady.

Plan

Given the metric we need to measure, we know this experiment must be run for at least a few months to gather meaningful data. We also need to find some branches that have similar account opening rates and account quality. Let’s say we have 4 of these, so we would use 2 to run the experiment and 2 to act as controls where we make no changes. These controls act as a baseline for comparing to our experiment branches.
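A rough sketch of how the experiment branches might be compared with the controls on both parts of the metric follows. The branch names and opening balances are invented, and this is a naive side-by-side comparison rather than a statistical significance test, which a real analysis would likely need.

```python
from statistics import mean


def group_summary(branches: dict[str, list[float]]) -> tuple[float, float]:
    """Return (mean new accounts per branch, mean opening balance per account).

    `branches` maps a branch name to the opening balances of the
    accounts it opened during the experiment window.
    """
    accounts_per_branch = mean(len(b) for b in branches.values())
    all_balances = [bal for b in branches.values() for bal in b]
    return accounts_per_branch, mean(all_balances)


def relative_change(experiment: float, control: float) -> float:
    """Change of the experiment group relative to the control group."""
    return (experiment - control) / control


# Hypothetical data: 2 control branches and 2 kiosk branches.
control = {"A": [1000.0, 2000.0, 3000.0], "B": [1500.0, 2500.0]}
kiosk = {"C": [1200.0, 1800.0, 3000.0], "D": [2000.0, 1500.0]}

control_count, control_balance = group_summary(control)
kiosk_count, kiosk_balance = group_summary(kiosk)

print(f"accounts per branch: {relative_change(kiosk_count, control_count):+.1%}")
print(f"average balance:     {relative_change(kiosk_balance, control_balance):+.1%}")
```

With these invented numbers the kiosk branches open the same number of accounts as the controls, but the average balance is 5% lower, which is exactly the kind of quality drop the count alone would miss.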

Here are the steps needed:

- Build or buy a kiosk app, install and configure it: 2-4 months

- Train branch employees for experiment: 1-2 weeks

  - This could take longer: branch employees may need time to adjust to the new tech to avoid the Hawthorne Effect and other biases

- Execute experiment: 12-16 weeks

  - You need enough time to gather meaningful data. Looking at historical data should give a sense of the time period needed.

- Review results to determine if the hypothesis was proven and whether to continue, stop, or pivot: 1-2 weeks

The team should meet on a regular cadence, multiple times a week, to ensure the experiment is being executed correctly. If you discover that the experiment is not executing correctly, resist the urge to change things mid-experiment, because the data you gather will not be valid. Instead, start the experiment again to ensure you have good data. You don’t want to spend months on an experiment only to have your boss kill the idea because the data is questionable.

And don’t forget cost. Once you have a plan, you need to estimate the cost to execute it. And it doesn’t hurt to have a simple ROI model to show the benefits of the change.
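A simple ROI model does not need to be elaborate. The sketch below assumes the only benefit is the banker time freed up by the kiosk and the only cost is a one-time spend per branch. Every figure in it is a placeholder to be replaced with your own estimates.

```python
def simple_roi(total_benefit: float, total_cost: float) -> float:
    """ROI as net benefit divided by cost, e.g. 0.5 means a 50% return."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(one_time_cost: float, annual_benefit: float) -> float:
    """Months needed for the benefit to cover the one-time cost."""
    if annual_benefit <= 0:
        raise ValueError("annual_benefit must be positive")
    return one_time_cost / (annual_benefit / 12)


# Hypothetical per-branch inputs.
banker_annual_cost = 60_000.0   # fully loaded cost of one full-time banker
share_of_time_freed = 0.75      # kiosk leaves under 25% of the banker's time needed
kiosk_one_time_cost = 30_000.0  # hardware, software and installation

annual_savings = banker_annual_cost * share_of_time_freed

print(f"first-year ROI: {simple_roi(annual_savings, kiosk_one_time_cost):.0%}")
print(f"payback: {payback_months(kiosk_one_time_cost, annual_savings):.1f} months")
```

The model ignores ongoing maintenance, training time and any change in account quality, so treat it as a starting point for the conversation rather than a forecast.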

Where to get help with your experiments

There are often groups in your company already running these types of experiments that can provide help. Many marketing and product teams use A/B testing and other forms of experiments to test messaging, advertising and product features. Seek them out. You can also turn to partners experienced in market research and product development to help with executing experiments.

Feel free to contact us if you’d like to further discuss how experiments can help you create your branch of the future.
