All young children (and many adults) firmly believe that the world revolves around them: what I want is more important than what you want, and so on. At worst, people like this give the term “egocentric” a bad name. But when it comes to actions and sounds, egocentric associations have positive implications in the exciting world of advanced technologies.

First, a couple of definitions. Exocentric and egocentric are fancy-sounding words that describe a frame of reference. Exocentric reference frames are relative to an external object or plane, whereas egocentric reference frames are relative to the self. For example, “to the west” is an exocentric direction, but “right in front of you” is an egocentric one.

In technology, “swiping right” on your smartphone is an exocentric interaction because the gesture is defined by, and sensed by, the screen, an object external to you. However, the “swipe right” interaction could be presented in an egocentric way. When using Microsoft’s Kinect, we are not limited to screen-based interactions. We can “swipe right” by gliding our arms through the air, and the system will register this gesture regardless of where we are standing in the room. This is an egocentric interaction.
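To make the difference concrete, here is a minimal Python sketch of how a body-relative “swipe right” could be recognized. It assumes a hypothetical skeleton tracker that reports 3D positions, in metres, for the hand and both shoulders; it is not the Kinect SDK’s actual API. “Right” is defined by the line from the user’s left shoulder to their right shoulder, so the same arm movement counts as a swipe wherever the user stands and whichever way they face.

```python
import math

Point = tuple[float, float, float]  # (x, y, z) in metres, hypothetical tracker coordinates

def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a: Point, b: Point) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def is_swipe_right(hand_start: Point, hand_end: Point,
                   left_shoulder: Point, right_shoulder: Point,
                   threshold_m: float = 0.3) -> bool:
    """Return True if the hand moved far enough toward the user's own right."""
    # The user's "right" axis runs from the left shoulder to the right shoulder,
    # so it moves and turns with the body instead of being tied to the room.
    axis = _sub(right_shoulder, left_shoulder)
    length = math.sqrt(_dot(axis, axis))
    right_axis = (axis[0] / length, axis[1] / length, axis[2] / length)
    # Project the hand's movement onto that body-relative axis.
    return _dot(_sub(hand_end, hand_start), right_axis) >= threshold_m

# Same gesture made in two places, facing two different directions:
print(is_swipe_right((-0.1, 1.2, 1.7), (0.4, 1.2, 1.7),
                     (-0.2, 1.4, 2.0), (0.2, 1.4, 2.0)))   # True
print(is_swipe_right((2.7, 1.2, -0.1), (2.7, 1.2, 0.4),
                     (3.0, 1.4, -0.2), (3.0, 1.4, 0.2)))   # True
# Moving along the room's x axis is not "right" for the second user:
print(is_swipe_right((2.7, 1.2, 0.0), (3.2, 1.2, 0.0),
                     (3.0, 1.4, -0.2), (3.0, 1.4, 0.2)))   # False
```

The 0.3 m threshold is an arbitrary placeholder; a real recognizer would also look at timing and smooth noisy joint data.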

So what’s the link with sounds? When we make a gesture, such as striking a key or hitting an object, we expect it to click, bang, rattle, or ring, depending on the object and the gesture. After experiencing the same interaction over and over again, we learn to associate that particular gesture with that sound. For example, you may associate the sound of crumpling paper with a squeezing or crushing gesture.

My recently published work from the Auditory Perception Lab at Carnegie Mellon University provides evidence that action-sound associations are egocentric in nature. This finding could affect the design of new apps and how we use them.

Smartphones getting more egocentric

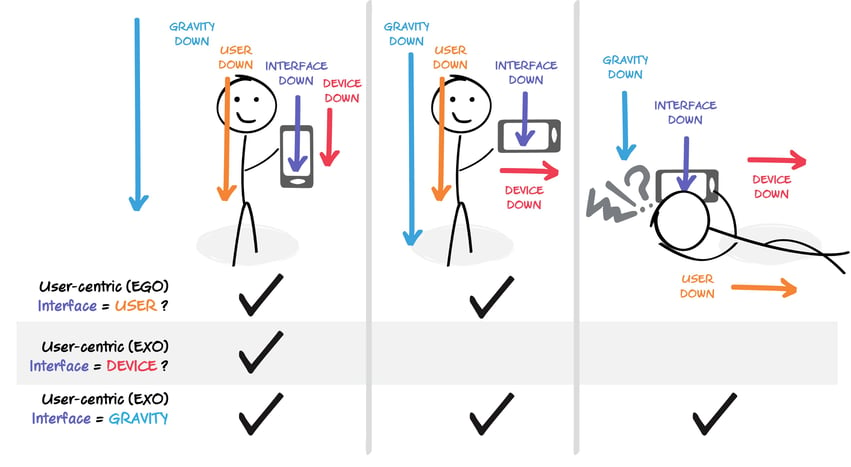

We just saw that smartphones are primarily exocentric due to their screen-based nature. To be fair, smartphones have tried to become more egocentric. In the past, swiping “right” always meant swiping towards the right side of the device. Now, thanks to the accelerometer, users can also rotate a device into landscape mode and swipe “right” towards the top of the device, making this interaction relatively egocentric. Of course, this interaction is not purely egocentric; it is actually “gravity-centric”. This is obvious to anyone who has tried to use their phone while lying on their side: the screen wrongly re-orients because the phone is on its “side” relative to gravity. Very annoying!

Smartphones seem egocentric because they rotate their interface when users turn the device on its side. In actuality, though, current smartphones are gravity-centric and will wrongly re-orient the screen if we try to use the device while lying on our side. Luckily, we spend most of our day in an upright position.
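A simplified Python sketch shows why this happens. It assumes the phone has two “up” directions available in screen coordinates: the world-up direction reported by the accelerometer, and a hypothetical head-up direction (for example, estimated by a face tracker). The function names and the coordinate convention (+x towards the device’s right edge, +y towards its top edge) are illustrative assumptions, not a real phone API. Both approaches use the same snapping logic; only the choice of “up” differs.

```python
import math

# Which device edge should be treated as "up" (hypothetical labels).
EDGES = ["top edge up", "right edge up", "bottom edge up", "left edge up"]

def snap_orientation(up_x: float, up_y: float) -> str:
    """Snap an 'up' direction in screen coordinates to one of four orientations.

    Assumed convention: +x points toward the device's right edge and +y toward
    its top edge, both in the plane of the screen.
    """
    # Clockwise angle from the device's top edge to the 'up' direction, in degrees.
    angle = math.degrees(math.atan2(up_x, up_y)) % 360
    return EDGES[round(angle / 90) % 4]

def gravity_centric(world_up_in_device: tuple[float, float]) -> str:
    # What a typical phone does: trust the accelerometer's notion of "up".
    return snap_orientation(*world_up_in_device)

def egocentric(head_up_in_device: tuple[float, float]) -> str:
    # Hypothetical alternative: "up" is wherever the top of the user's head points.
    return snap_orientation(*head_up_in_device)

# User lying on their side, holding the phone upright relative to their face:
# world-up now points out of one side edge (here the right edge), while the
# user's head-up still points toward the top edge.
world_up = (1.0, 0.0)
head_up = (0.0, 1.0)
print(gravity_centric(world_up))  # right edge up: the screen rotates
print(egocentric(head_up))        # top edge up: the screen stays put
```

In an upright posture the two vectors agree, which is why the gravity-centric shortcut works most of the time.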

Gestures, sounds and us

Besides natural links between gestures and sounds (such as crumpling paper), these associations can be artificially created, and they are used in technology every day. Pressing ATM keys produces certain tones. Plugging a device into your computer makes a confirmation sound. Scanning groceries produces that recognizable “bloop” sound. The list goes on. In fact, if you are a Mac user, the sound of crumpling paper may actually be associated with dragging a file into the trash can!

Despite the importance of gesture-sound pairings, little is known about their reference frames. Do we think of tone-producing ATM keys in terms of the keys themselves? Or do we think of them relative to our body’s gesture on the keys?

My research provides evidence that action-sound associations are egocentric, meaning that we think about the experience in terms of our own body.

Yeah, so what? It means that the “sound” of entering your ATM PIN is associated with the gesture, regardless of your location and whether an ATM is actually present. The keys themselves do not actually matter.

Egocentrism for better learning

Previous design decisions were limited by our physical devices. “Press A to jump”, “Dial 0 for the operator”, or “Press the shutter button to snap a photo.” Now we can actually jump using a Kinect, we can say “Call Joe” using Google Now, or we can use hand gestures to trigger our camera. As technology becomes more and more user-centric, a fully egocentric frame of reference can become possible. But is this desirable?

According to my research, the answer is yes... if you want to create an association between a gesture and a sound. The best way for us to learn a relationship between a gesture and a sound is through an egocentric reference frame, because egocentric reference frames create a stronger gesture-sound association than exocentric ones.

Imagine that through our new partnership with Samsung, Summa was asked to design an innovative technology using the Gear VR headset. As an extremely simplified example, assume that we wanted to create a virtual reality system that taught users that looking “up” produced a high-pitched tone and looking “down” produced a low-pitched tone. Perhaps this is a music-making device or some sort of physical therapy tool.

According to my research, the ideal setup would be egocentric, meaning that “up” would not be defined as “towards the ceiling”, but would always be relative to the user’s head position. If the user were lying down on their back, then looking “up” would actually be towards a side wall. If the user were kneeling down to tie their shoe, looking “up” would be towards a different wall. Configuring the system in this way would create the strongest association between the gesture and the sound.
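The difference between the two setups comes down to which vector counts as “up”. The Python sketch below assumes world coordinates with +z pointing at the ceiling, a gaze direction from the headset, and a hypothetical calibrated body-up vector (the direction towards the top of the user’s head in a neutral pose). It maps gaze elevation to a tone between 220 Hz and 880 Hz, an arbitrary range chosen for illustration. None of this is the Gear VR SDK; the names are made up for the example.

```python
import math

Vec3 = tuple[float, float, float]

WORLD_UP: Vec3 = (0.0, 0.0, 1.0)  # assumed: +z points at the ceiling

def _unit(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)

def elevation_deg(gaze: Vec3, up: Vec3) -> float:
    """Angle of the gaze above (+) or below (-) the plane perpendicular to `up`."""
    g, u = _unit(gaze), _unit(up)
    dot = sum(a * b for a, b in zip(g, u))
    return math.degrees(math.asin(max(-1.0, min(1.0, dot))))

def tone_hz(elevation: float, low: float = 220.0, high: float = 880.0) -> float:
    """Map -90..+90 degrees onto low..high Hz on a logarithmic (musical) scale."""
    t = (elevation + 90.0) / 180.0
    return low * (high / low) ** t

def exocentric_tone(gaze: Vec3) -> float:
    # "Up" means towards the ceiling, whatever the user's posture.
    return tone_hz(elevation_deg(gaze, WORLD_UP))

def egocentric_tone(gaze: Vec3, body_up: Vec3) -> float:
    # "Up" means towards the top of the user's head in a neutral pose.
    # body_up is a hypothetical input, e.g. calibrated at the start of a session.
    return tone_hz(elevation_deg(gaze, body_up))

# User lying on their back with the top of their head towards the +x wall.
body_up: Vec3 = (1.0, 0.0, 0.0)
look_up_for_user: Vec3 = (1.0, 0.0, 0.0)  # gaze towards the wall past their head
print(round(egocentric_tone(look_up_for_user, body_up)))  # 880: high tone, as intended
print(round(exocentric_tone(look_up_for_user)))           # 440: the "up" gesture is lost
```

The logarithmic scale means equal head movements produce equal musical intervals; a linear mapping would also work for a simple prototype.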

What's next?

As we incorporate more and more physical devices and sensors into our world, we will realize there are unanswered design questions to explore, such as this frame of reference concept. “Egocentric vs exocentric” did not need to be considered in the past, but will play a large part in future technology.

We don’t need to wait until these advanced technologies become commonplace before we start considering their implications. For example, just the wording of instructions can make people think about things in an egocentric or exocentric way. Let’s say here at Summa we were designing an app for Samsung’s Galaxy S7 Edge smartphone. For simplicity, we will assume the app is locked in portrait orientation and the user does not rotate their device. Throughout the experience, directions could say “Swipe Right” or “Swipe towards the Right Edge”. By simply emphasizing the device in the instructions, the interaction is perceived as more exocentric, despite being the same gesture!

We need to consider such design choices with care. My research findings, as well as other knowledge bases, need to be brought together to make the best design decision. Keeping up-to-date on the latest research and trends helps us make the most educated design choices, especially through the uncharted territories of tomorrow. The future is coming. Let’s design for it.